How We Do Technical SEO

Discuss And Fix Goals And Objectives

The first step is to understand your marketing process properly. This is where we set targets and decide how to reach them. As our client, you will be closely involved in this step.

Initial Detailed Keyword Analysis

Proper keyword analysis forms the basis of all SEO processes for business. We analyze thoroughly before we settle on the right keywords. The more time we spend on keyword research, the better the long-term results tend to be.

Identifying Niche-Related Metadata

A critical step in creating an SEO funnel is choosing the right metadata. A niche-relevant metadata framework is important for your success. We focus on the high-value data most likely to drive results.

Send Acquired Data To Client For Approval

Once we’ve gathered all the data, we send it across to you, the client, for approval. After we’ve discussed it with you, we move forward with the SEO process. The data we acquire is added to a secure repository for your reference.

Commence SEO Pipeline

The acquired data and client feedback are processed, and the SEO pipeline begins. Specialist teams are assigned to your project based on their skill sets. We also use cross-functional teams to improve your marketing processes.

Optimize On-Page And Off-Page Data

A vital step in the SEO pipeline is optimizing both on-page and off-page elements. While on-page optimization is usually carried out, off-page work is often neglected. Our team delivers powerful off-page SEO results through various channels.

Implement SEO Processes

Once all the data gathering and analysis are completed, the implementation phase begins. We take care of any complications that arise during this process. Our experts can execute SEO processes effectively at any scale.

Detailed Reporting And Recommendations

Once the implementation phase is over, we focus on detailed reporting of our findings. During this phase, we set out our recommendations for your SEO and discuss how you can get the most out of your marketing.

Benefits of Technical SEO

Improves Performance On SERPs

High-performance technical SEO helps your business stay ahead of your rivals. We help your pages match user queries much more closely. This also improves content relevance, which can lift rankings across your niche-related keywords.

Increases Coverage Across All Channels

Brand reach is an important metric to retain relevance and awareness. Technical SEO can help improve your coverage through different marketing channels. This includes search results, social media, email, and direct selling, to name a few.

Increases Rich Snippet Options

While the first rank is a coveted place in search results, there’s something that can be even more valuable. Featured snippets appear above the #1 organic result, earning your brand a “position-zero” spot, while rich snippets enrich your listing with details such as ratings and prices.

Improves Quality Of Leads

For any business to thrive, it needs a reliable way to increase leads. Better SEO improves the quality of your leads. This can mean much more business for your brand with little increase in spending.

Increases Audience Engagement

Today, engagement is one of the most critical parts of a marketing campaign. The more engaged your brand is with users, the more buzz you get on the internet. Technical SEO improves engagement metrics by making your content more relevant to your audience.

Increases Conversion Rate

Technical SEO improves your website's relevance to user queries, which brings better visibility and more clicks from people looking for what you offer. Conversion rate metrics are critical to determining the success of your business.

Improves ROI And Reduces Cost

ROI is the most reliable measurement of an SEO pipeline. Technical SEO delivers better performance per cost for your marketing funnel. It can lower your expenses and improve results.

Increases Footfall In Your Local Store

If you run a brick-and-mortar store, footfall is an important metric to consider. Technical SEO helps search engines understand your website and surface it in local searches. This lets more people know about your local store, which means more visits.

Increases Brand Awareness In Your Niche

One way to generate more leads and business is to focus on brand awareness, which increases your brand's visibility through relevance. It is difficult to create buzz without an adequate technical SEO framework.

Increases Market Share For Your Company

With the high competition in the market, every brand is vying for a piece of the pie. Using relevant keywords and insights, we can help you win more customers and grow your market share.

Improves Website Loading Time And Other Metrics

Page load speed is one of the most vital ranking metrics today. Slow websites tend to lose both rankings and visitors to faster competitors. You cannot improve load speed without focusing on the technical aspects of SEO.

Improves Trust Scores And Credibility

Today’s marketing is all about trust-based methods. Technical SEO ensures that your business accrues credibility and that people trust you. This is essential to be competitive and successful in today’s business ecosystem.

Technical SEO

There are three main types of SEO: Technical SEO, On-page SEO, and Off-page SEO. Technical SEO is all about the behind-the-scenes work that needs to be done to ensure your website is in good shape for Google and other search engines.

This includes optimizing your site's code, making sure your website is mobile-friendly, and setting up proper tracking and analytics.

Improving the website's architecture, implementing best practices for crawling and indexing, and submitting a sitemap to Google also come under Technical SEO. To achieve better results, Technical SEO can be carried out alongside on-page optimization and content marketing.

Read on for a mini guide to the ins and outs of the Technical SEO world.

Importance of Technical SEO

To Make Your Site Easy to Navigate

Technical SEO adjusts a website for the crawlers and indexers that Google and other search engines use to gather information about websites.

It makes it easy for crawlers and indexers to understand the structure and content. This makes it easier for search engines to rank websites on their search results pages.

Optimizing a website's technical infrastructure makes it easier for both humans and search engine crawlers to navigate it. The optimization usually includes improving the website's architecture, code quality, internal linking, and usability.

You can use PurifyCSS Online to remove unused CSS, which slims down your pages and makes them quicker to use.

To Improve Your Site's Loading Speed

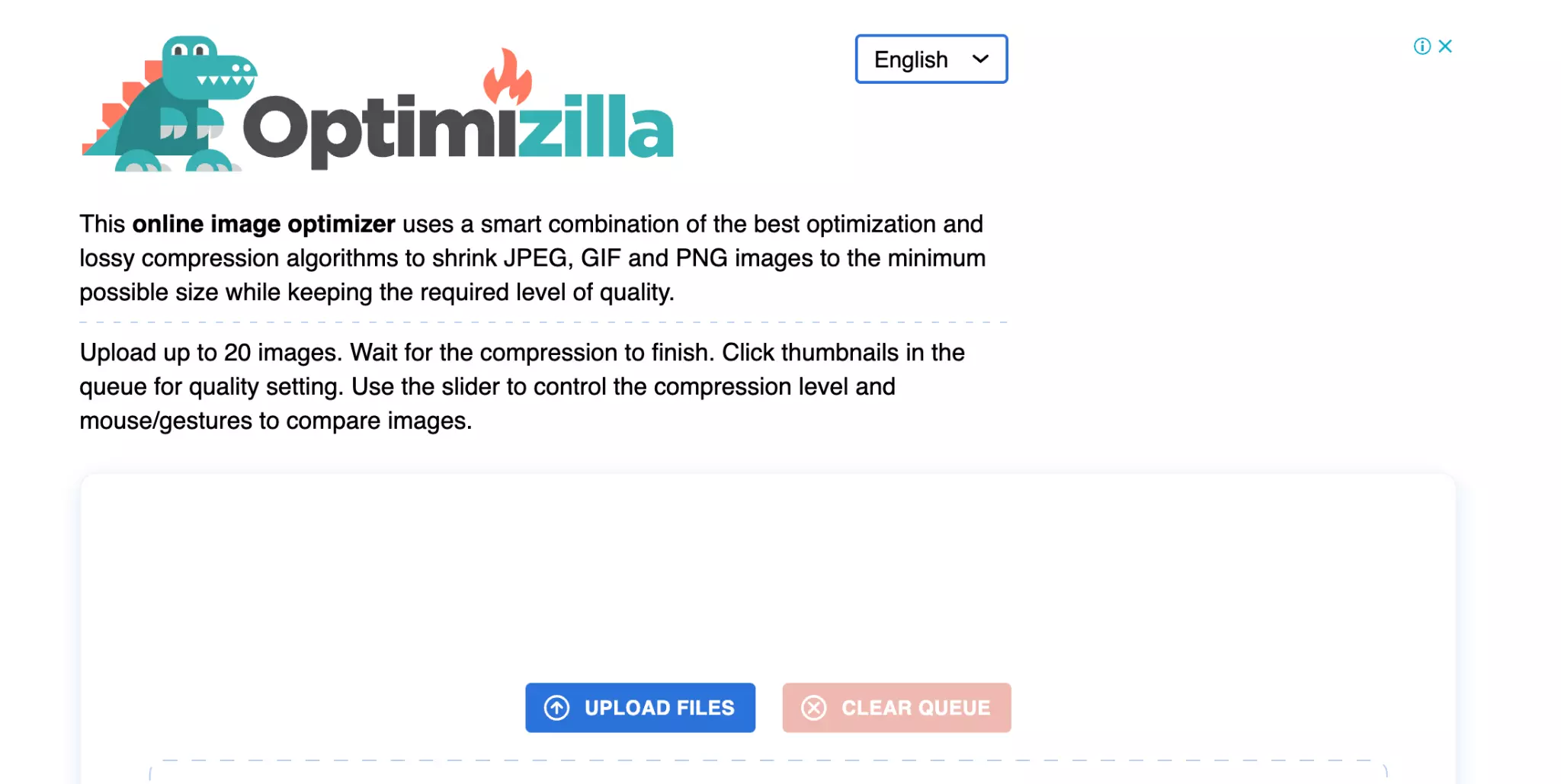

When it comes to improving site speed, Technical SEO is the key. By ensuring that a website is properly coded and structured, you can eliminate many issues that can slow it down. This includes optimizing images, reducing server response time, and minifying code.

Also, a caching plugin can serve pre-built static versions of your pages, and browser caching stores files such as images and stylesheets on the visitor's device. Together, these reduce the amount of data that needs to be generated and downloaded each time someone visits your site.

Other techniques include compressing images and reducing the number of HTTP requests a page makes. By improving a site's loading speed, Technical SEO helps ensure that users have a positive experience on the site and supports higher rankings in search engine results pages (SERPs).

You can use an image compressor to reduce the file size of images.

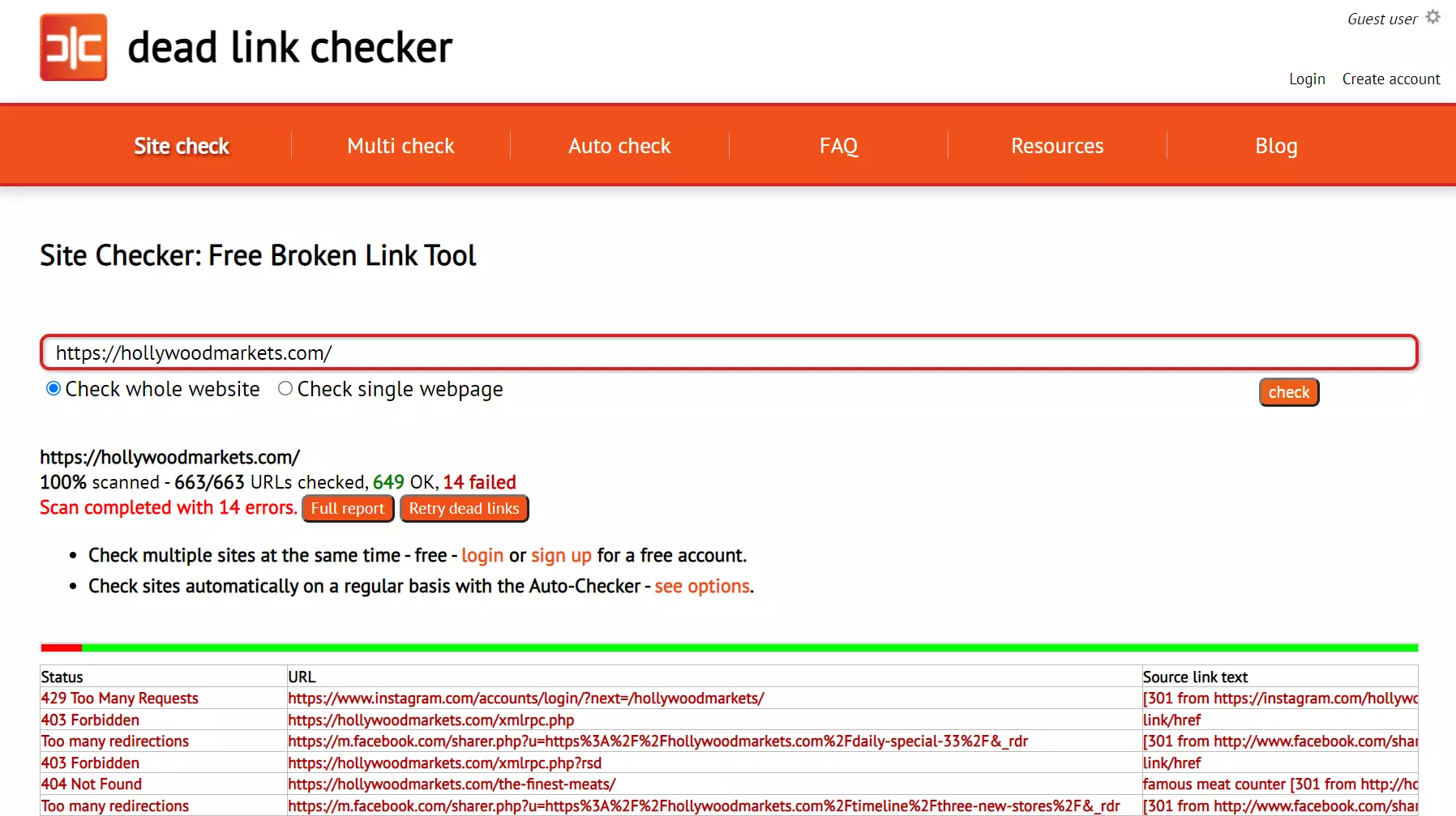
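Besides compressing images, web servers commonly compress text responses (HTML, CSS, and JavaScript) with gzip before sending them. This technique isn't named in the list above, so treat it as a complementary example. The minimal Python sketch below, using a made-up product-list page, shows how much repetitive markup shrinks; in practice you would switch compression on in your web server or CDN rather than compress pages by hand.

```python
import gzip

def compression_report(body: str) -> tuple[int, int]:
    """Return (original size, gzipped size) in bytes for a text response body."""
    raw = body.encode("utf-8")
    packed = gzip.compress(raw, compresslevel=6)  # 6 is zlib's default speed/size trade-off
    return len(raw), len(packed)

# Hypothetical, repetitive HTML, similar in shape to a real product listing.
html = "<ul>" + "".join(f"<li class='item'>Product {i}</li>" for i in range(200)) + "</ul>"
before, after = compression_report(html)
print(f"{before} bytes -> {after} bytes ({after / before:.0%} of the original)")
```

Markup with many repeated tags and class names compresses especially well, which is why text compression is usually one of the cheapest speed wins available.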

To Fix Broken Links

Broken links can be frustrating, leading to a poor user experience. When links are broken, users may not be able to find the information they are looking for, which can impact their ability to complete tasks on the website.

Broken links can also affect a website's search engine ranking. Google uses link popularity as one of its ranking factors, and links that point to dead pages waste the value they would otherwise pass on.

Optimizers fix broken links by identifying the source of the error and then repairing the link. If the link simply points to the wrong address, they correct the URL in the HTML code. If the target page on your own site has moved, they set up a 301 redirect from the old URL to the new one, and if a page on another website has disappeared, they update or remove the link.

You can use Online Broken Link Checker to scan your website for broken links.

To Display Rich Snippets in Search Results

Technical SEO helps display rich snippets in search results by using schema.org markup. By adding specific markup to your web pages, you can indicate the specific type of content on that page to search engines.

This can allow search engines to display more information about your content in the search results, such as images, ratings, and prices.

Say that you are an online retailer selling rugs. Structured data markup on your product pages can let search engines show a specific product's price and ratings as a rich snippet, right in the search results.
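For the rug example, this markup is usually a JSON-LD script placed in the page's HTML. The sketch below builds one with Python's standard json module. The product name, price, and rating figures are hypothetical, and search engines decide for themselves whether to show a rich snippet, so the markup makes a page eligible rather than guaranteeing one.

```python
import json

def product_jsonld(name, price, currency, rating, review_count):
    """Return a <script> tag holding schema.org Product markup in JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'

# Hypothetical product data for the rug example.
print(product_jsonld("Handwoven Wool Rug, 5x8 ft", 249.0, "USD", 4.6, 132))
```

The printed tag goes in the product page's HTML; the ratings it declares should match the reviews actually shown on that page.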

To Keep Your Site Safe and Secure

When a website is not safe or secure, it can be a major security risk for the user. This is because there is a chance that the user's personal information could be compromised if the website is not secure.

Also, if the website is not safe, there is a risk that the user could become infected with malware or ransomware. You certainly don't want to infect your website visitors' devices with malware, do you?

For these reasons, websites need to be both safe and secure.

If your Technical SEO is robust, your site will be less likely to experience problems like crashes or hacking and will be more resistant to malware and other online threats.

You can buy SSL certificates from multiple providers to secure your website.

To Improve Your Website's Indexing

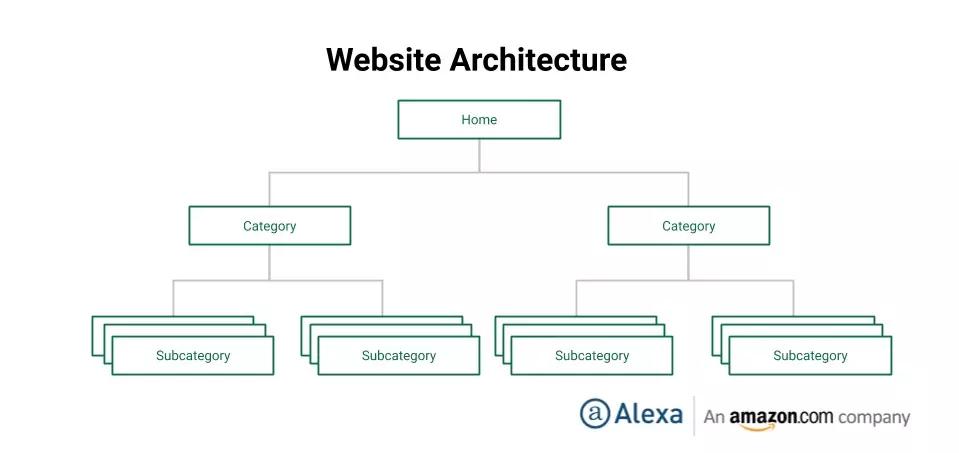

Technical SEO is the practice of configuring a website to improve its chances of being found and indexed by search engines. This can involve improving the site's structure, implementing AMP (Accelerated Mobile Pages), and other factors influencing how well it appears on SERPs.

The practice also involves fixing any errors that may prevent a site from being indexed. By making these improvements, a website can increase its visibility and attract more visitors from search engines.
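One common indexing error is a page that tells search engines not to index it, either through a robots meta tag or through an X-Robots-Tag HTTP header containing noindex. The minimal sketch below checks for both. It is a simplification: it ignores crawler-specific header values such as "googlebot: noindex", and the function names are illustrative.

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = {name: (value or "") for name, value in attrs}
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            content = attrs.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]

def blocks_indexing(html, x_robots_tag=""):
    """True if the page's meta tags or X-Robots-Tag header value ask not to be indexed."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    header = [d.strip().lower() for d in x_robots_tag.split(",")]
    # "none" is shorthand for "noindex, nofollow".
    return any(d in ("noindex", "none") for d in finder.directives + header)

print(blocks_indexing('<meta name="robots" content="noindex, follow">'))
```

Running a check like this over important pages after a redesign or CMS migration can catch a leftover noindex from a staging site.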

Here’s an example of a good website structure.

To Improve Your Ranking

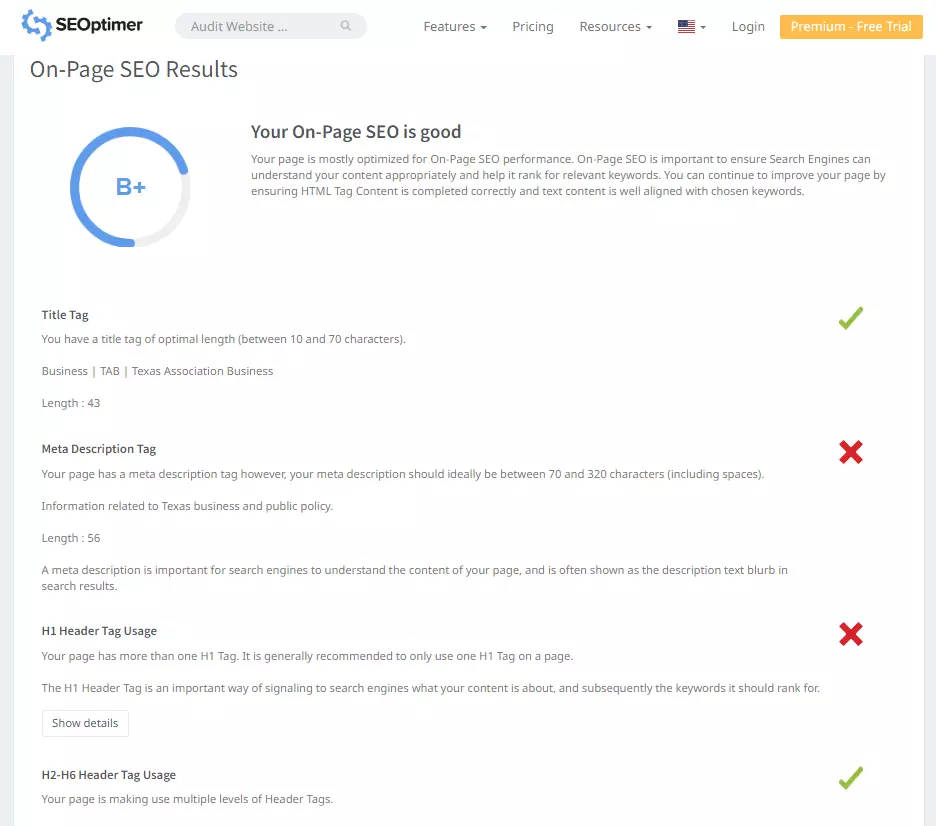

Technical SEO audits are one of the most important and effective ways to improve a site's ranking on search engines.

By identifying and resolving issues with the site's technical infrastructure, you can make it easier for search engine crawlers to index your content and understand your site's structure. This, in turn, can help you improve your site's ranking on SERPs.

The process often encompasses a variety of techniques, such as optimizing the site's structure and URLs and fixing any technical issues that may be preventing the site from ranking well.

You can find and resolve issues with your website using SEOptimer.

To Give a Good User Experience

Technical SEO takes care of many elements, including the website's title tags, meta descriptions, h1 tags, robot exclusion protocols, and site architecture.

By improving these elements, a website's crawlability and indexability will improve.

As a result, the site will run buttery-smooth, improving the user experience.

It also involves removing pop-ups, auto-playing videos, and other distracting or intrusive elements that can negatively impact a user's experience on a site.

You can use an SEO audit tool to improve the website user experience.

Technical SEO Tips

Install an SSL Certificate

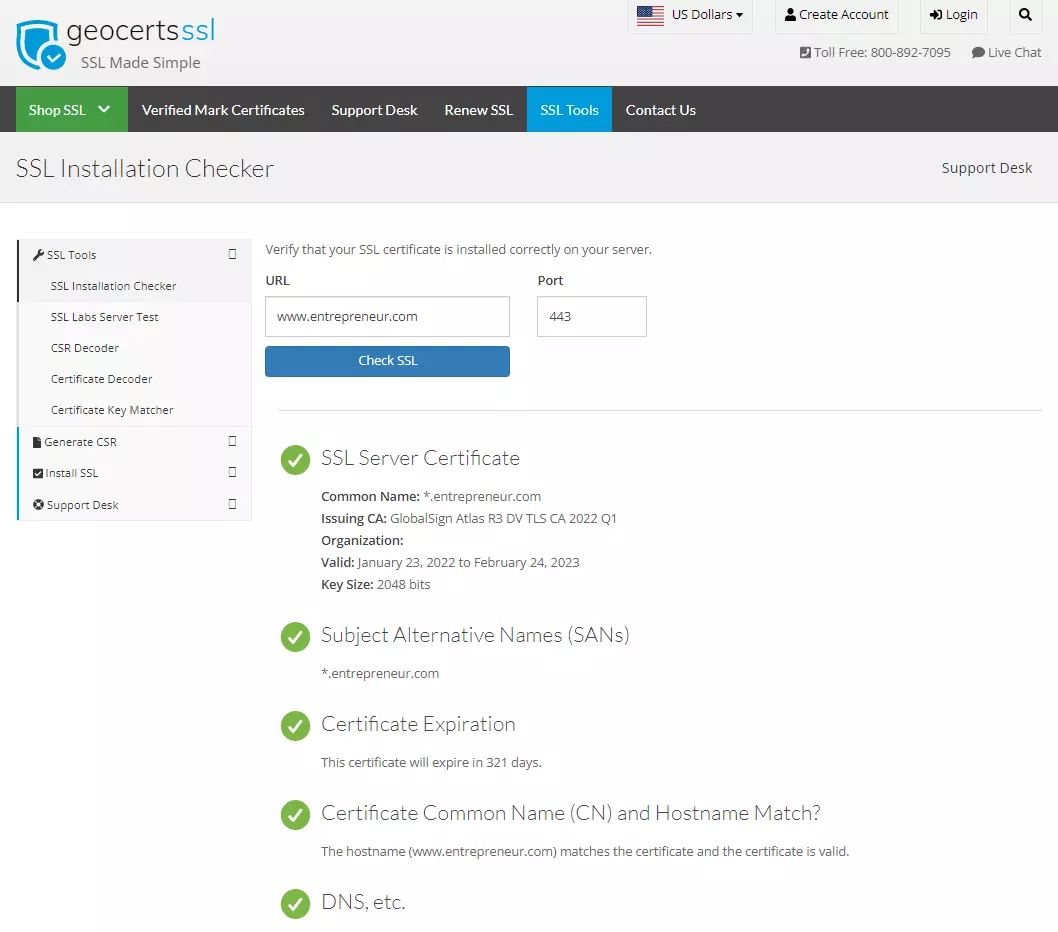

SSL certificates provide a high level of security for online businesses.

They create a secure connection between the customer's browser and the server, ensuring that all data is transmitted securely.

This helps protect customers from identity theft and fraud and ensures that their personal information is not compromised.

SSL certificates also help increase customer trust, which can result in more sales.

Installing an SSL certificate is a straightforward process, but there are a few things to keep in mind. The first step is to purchase an SSL certificate. There are many providers of SSL certificates, and most include a variety of features. Be sure to select one that meets your needs.

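Once you have chosen an SSL certificate, you will need to generate a key pair: a public key and a private key that work together to secure data. You usually do this while creating a certificate signing request (CSR), which contains your public key and is sent to the certificate authority so it can issue your certificate.

The public key is published in your certificate, and data encrypted with it can only be decrypted with the matching private key. Only the private key must be kept secret: it stays on your server, and anyone who obtains it could impersonate your site.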

Here Are The Three Best SSL Certificate Providers:

- Comodo (now Sectigo) - World's "largest" commercial certificate authority

- SSL.com - A certificate authority since 2002

- DigiCert - An all-in-one website security provider

You can check if your website has an SSL certificate.
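If you'd rather check from a script, the sketch below uses Python's standard ssl and socket modules to connect to a host, verify its certificate, and report how many days remain before it expires. It assumes the site serves HTTPS on the default port 443, and the helper names are hypothetical.

```python
import socket
import ssl
import time

def cert_not_after(host, port=443, timeout=5.0):
    """Open a verified TLS connection and return the certificate's expiry string."""
    context = ssl.create_default_context()  # checks the chain of trust and the hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]  # e.g. "Jun  1 12:00:00 2025 GMT"

def days_until_expiry(not_after, now=None):
    """Whole days between now and a certificate's notAfter timestamp."""
    expires = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return int((expires - now) // 86400)

# Needs network access:
# print(days_until_expiry(cert_not_after("www.example.com")))
```

If the certificate is missing, expired, or issued for a different hostname, the connection raises ssl.SSLCertVerificationError, which is itself a useful signal that something needs fixing.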

Rectify Duplicate Content Issues

Duplicate content can negatively affect the visibility and ranking of a website. This is because search engines may not be able to determine which version of the content is the most relevant to users.

This can also confuse users, as they may not know which version of the content is the most accurate.

Duplicate content can also result in a loss of traffic and revenue. Therefore, it is important to fix any duplicate content issues as soon as possible. These issues can arise for various reasons, but fortunately, they're relatively easy to identify and fix.

The Most Common Causes Of Duplicate Content Are:

- URL parameters

- Session IDs

- Tracking codes

However, several other factors can contribute to the problem, including canonicalization issues, multiple versions of a page, and robots.txt files.

Once you've identified a duplicate content issue, the next step is to fix it. This can be done in several ways, depending on the cause of the problem.

Example:

Say that an issue is caused by URL parameters such as session IDs. You can remove them from the URLs your site links to, or point each parameterized URL to the clean version with a canonical tag. If multiple page versions cause the problem, you can use canonical tags to indicate which version is the primary version.
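The sketch below shows one way to apply both fixes in Python: strip parameters that don't change the page content, then emit a canonical tag pointing at the clean URL. The set of ignored parameters is a hypothetical example; which parameters are safe to drop depends on your site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that never change what this site's pages show.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}

def canonical_url(url):
    """Drop ignored parameters and fragments, sort the rest, and lowercase scheme and host."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", urlencode(kept), ""))

def canonical_link_tag(url):
    """The <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'

print(canonical_link_tag("https://Example.com/rugs?utm_source=news&color=red&sessionid=abc123"))
```

Sorting the remaining parameters is a design choice: it stops "?size=5x8&color=red" and "?color=red&size=5x8" from being treated as two different pages.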

Improve Page Speed Through Optimization

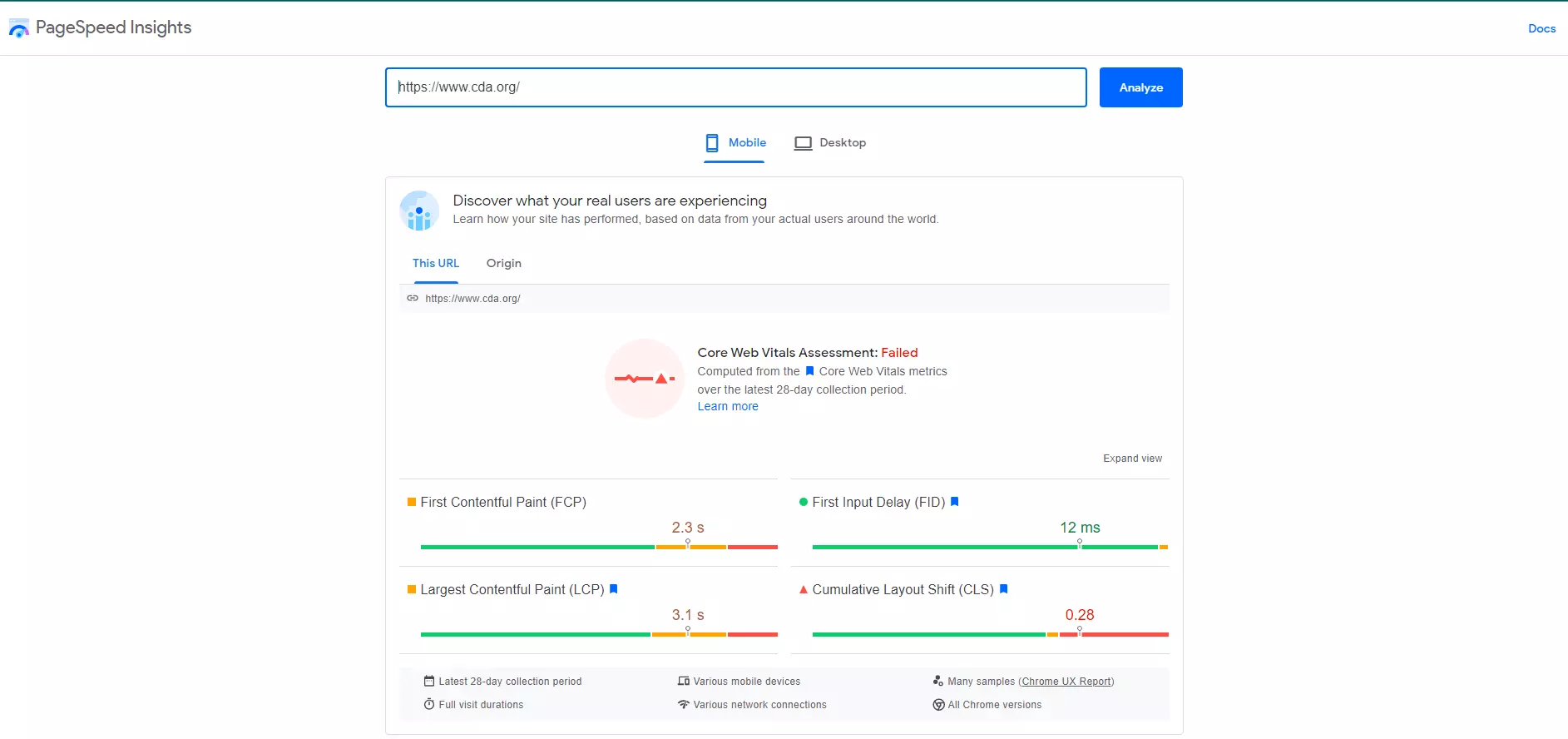

Page speed is one of the most important factors for a successful website. It affects not only the user experience but also SEO. Fortunately, there are a few things you can do to improve your page speed.

This includes optimizing images, using a content delivery network (CDN) like Bunny, and minifying code.

- By optimizing your images using a tool like iLoveIMG, you can reduce the file size and improve load times.

- A CDN distributes copies of your content across multiple servers, so each visitor is served from a nearby location, improving page speed.

- And finally, minifying code will remove unnecessary characters from your code, resulting in a faster load time.

You can also use cache plugins like WP Fastest Cache to improve your site speed. Cache plugins save pre-generated static copies of your pages and can set browser-caching rules for files such as images, CSS, and JavaScript. This means your web server doesn't have to rebuild every page on every request, which results in a faster-loading website.

You can also use Google’s PageSpeed Insights to optimize page speed.

These techniques can help you improve your page speed and provide a better user experience.

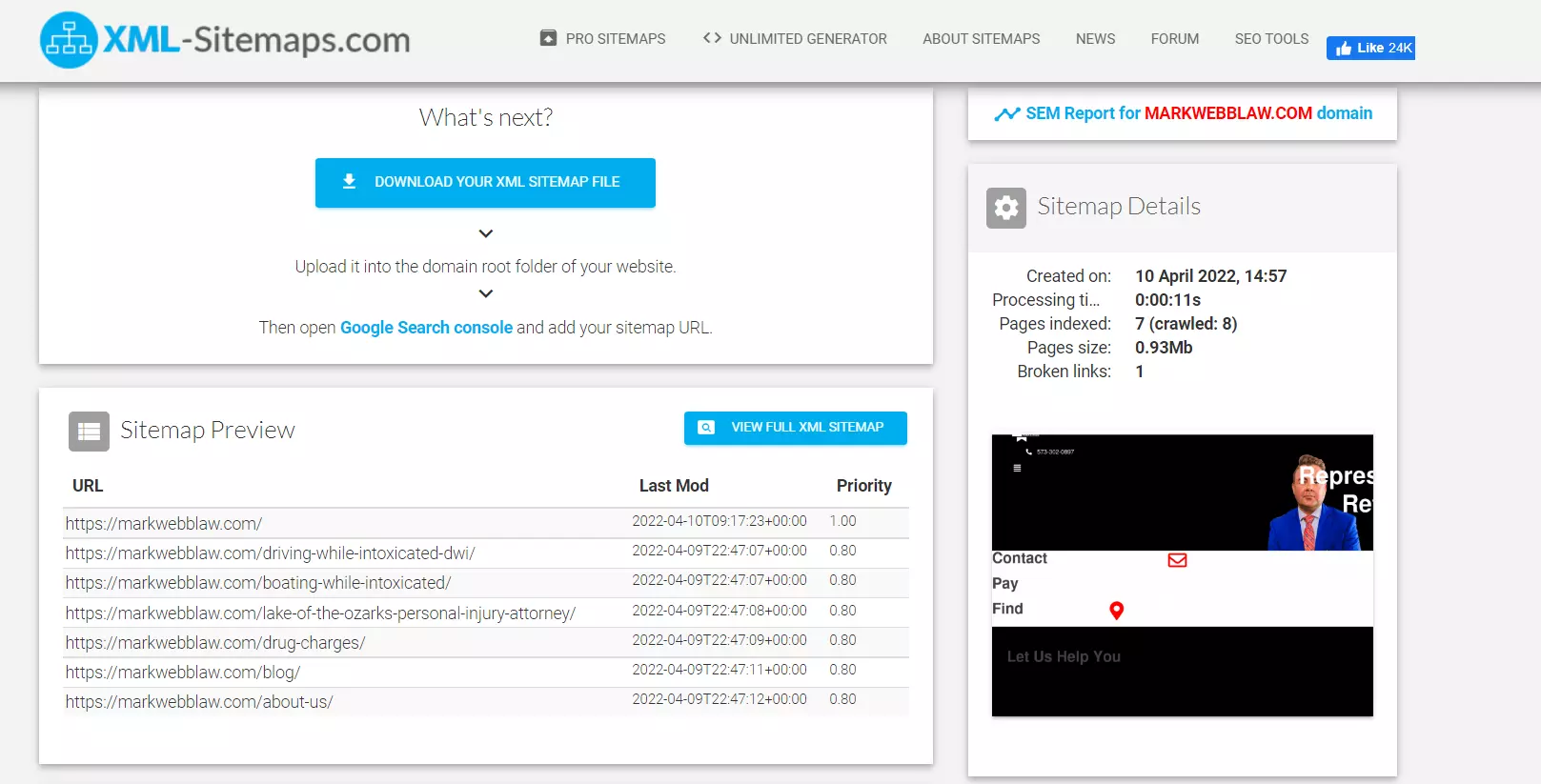

Create an XML Sitemap

An XML sitemap is an extremely important part of any website. It helps search engines better understand your site's hierarchy and structure, making it easier to index pages correctly.

It can also help new or deeply buried pages get discovered sooner, which supports their visibility in search results. Once you submit a sitemap, reports such as the Sitemaps report in Google Search Console show how the listed pages are being processed, helping you identify potential issues that need to be fixed.

To create an XML sitemap, you can use a CMS plugin, an online generator, or a short script. There are many different tools available, so be sure to choose one that fits your needs.

Once your XML sitemap is created, submit it to Google through Google Search Console and to Bing through Bing Webmaster Tools. You can also list it on a Sitemap: line in your robots.txt file.

Tip: Use a tool like XML Site Map Generator or Sure Oak to create a sitemap for free!
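If you prefer to generate the file yourself, the sketch below builds a minimal sitemap with Python's standard xml.etree module, following the sitemaps.org format (a urlset of url entries, each with a loc and an optional lastmod). The URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """pages: iterable of (absolute URL, last-modified date as YYYY-MM-DD)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
        ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")

# Placeholder pages for illustration.
print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/products/cbd-gummies", "2024-02-01"),
]))
```

Save the output as sitemap.xml at your site root and submit its URL. The format requires characters such as & to be entity-escaped, which ElementTree handles automatically.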

Add Structured Data Markup

Structured data markup is a system for labeling content on a web page so that search engines can understand it better. It is added either as attributes around the relevant words and phrases (Microdata or RDFa) or as a separate JSON-LD script block, which is the format Google recommends.

When a search engine reads this markup, it can tell exactly what the content is (a product, a recipe, an event), which makes the page eligible for rich results in the search listings.

There Are Several Benefits To Using Structured Data Markup.

- First, it can make your pages eligible for rich results, which take up more space and stand out in the search results.

- Second, it can help improve your click-through rate (CTR), because listings enhanced with ratings, prices, or images attract more clicks than plain links.

- Third, it can help you create richer and more informative search listings, which can increase your conversion rate.

Three Helpful Tools:

- Merkle - Schema Markup Generator

- Schema.org - A community to create, maintain, and promote schemas for structured data

- Rank Ranger - Structured data generator tool to easily create FAQ Page, How-to articles, Job Postings, etc.

Optimize Your Internal Links

Internal link optimization is the practice of refining the links between pages on your website. Its main advantage is that it can help improve your pages' search engine rankings.

You create a "web of trust" that tells the search engines that your website is a valuable resource by linking related pages together. Internal link optimization can also help you improve click-through rates (CTRs) and keep visitors on your website longer.

It aims to create a logical flow of information that helps users navigate your website and find the information they are looking for.

Tips:

- Use keyword-rich anchor text

- Use follow links

- Place CTA buttons on sales (landing) pages.
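A quick way to spot weak internal linking is to count how many internal links point at each page. Pages with none, often called orphan pages, are hard for both visitors and crawlers to reach. The sketch below assumes you already have a map of every known page and the internal pages it links to (for example, a crawl export combined with your sitemap URLs); the site shown is made up.

```python
from collections import Counter

def inbound_counts(links):
    """links: dict mapping each page to the internal pages it links to."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source:     # ignore self-links
                counts[target] += 1
    return counts

def find_orphans(links, home="/"):
    """Pages (other than the home page) that no other page links to."""
    return sorted(page for page, n in inbound_counts(links).items() if n == 0 and page != home)

# Hypothetical link map for a small store.
site = {
    "/": ["/rugs", "/blog"],
    "/rugs": ["/rugs/wool"],
    "/rugs/wool": ["/rugs"],
    "/blog": [],
    "/old-sale": ["/"],
}
print(find_orphans(site))  # ['/old-sale']
```

Pages with only one or two inbound links are worth reviewing too; linking to them from related content is an easy improvement.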

Fix Crawl Errors

Crawl errors occur when Google's crawler cannot reach or fetch a page on your website. This can be due to several reasons, such as the website being down, incorrect settings, or a firewall blocking Google's crawler.

There are many reasons why a website might not be crawled and indexed properly by search engines. Fixing crawl errors helps ensure that search engines can reach your pages, which they need to do before those pages can rank on SERPs.

You can do several things to fix crawl errors, including checking your robots.txt file, ensuring your website is accessible from multiple locations, and ensuring your website's code is error-free.

The most common errors are caused by incorrect robots.txt files, which tell crawlers which parts of the site to ignore, and incorrect robots meta tags, which tell search engines how to treat individual pages.

You can use a crawlability test tool to identify crawl errors.

Fix Broken Links

It's happened to all of us. We're cruising along on the web, reading an interesting article, or browsing through some pictures, when suddenly we click on a link, and nothing happens. The page doesn't load, and we're left with a frustrating dead end.

This can be due to many things:

- The page may have been deleted since the link was published

- The site may have gone offline

- The link's URL may have been mistyped

Broken links are undoubtedly a frustrating part of the web. They can occur when a page is deleted, a site moves, or a URL is changed.

Thankfully, there are several ways to fix broken links. The most common method is to use a free link checker tool such as Broken Link Check or Dead Link Checker to find the broken links on your site and then replace them with working ones.

Here’s a screenshot of Dead Link Checker.
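For a do-it-yourself check, the Python sketch below collects the links on a page and reports those that return an error status or can't be reached. It sends HEAD requests, which some servers reject (often with 405), so treat the results as a starting point. The status lookup is passed in as a parameter so it can be swapped out; all names are illustrative.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urldefrag, urljoin, urlsplit
from urllib.request import Request, urlopen

class LinkCollector(HTMLParser):
    """Collects absolute http(s) URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href") if tag == "a" else None
        if href:
            url, _fragment = urldefrag(urljoin(self.base_url, href))
            if urlsplit(url).scheme in ("http", "https"):  # skips mailto:, tel:, etc.
                self.links.add(url)

def http_status(url, timeout=10):
    """Final HTTP status after redirects, or None if the server can't be reached."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as resp:
            return resp.status
    except HTTPError as err:  # 4xx and 5xx responses
        return err.code
    except OSError:           # DNS failures, refused connections, timeouts
        return None

def broken_links(html, base_url, get_status=http_status):
    """Map each broken link on the page to its status (None means unreachable)."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    report = {}
    for link in sorted(collector.links):
        status = get_status(link)
        if status is None or status >= 400:
            report[link] = status
    return report

# Needs network access:
# print(broken_links(page_html, "https://www.example.com/"))
```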

Disavow Bad Backlinks

Disavowing is a process by which website owners ask Google to ignore toxic links to their site from sources they believe contribute to spam or negative SEO.

By disavowing these links, site owners can clean up their link profile and protect their site from being penalized by Google.

Disavowing these links can help your website's SEO because it reduces the number of bad links Google takes into account. It doesn't delete the links from the web; it asks Google to ignore them when assessing your site. To disavow links, you can use the disavow links tool in Google Search Console.

Spammy and dangerous links hurt a website's ranking and visibility. Toxic backlinks can even lead to a website being penalized by Google. This can cause a lot of damage to a business, as it will lose traffic and visibility.

The best way to avoid this is to use a tool like Monitor Backlinks, which will help you identify and disavow any dangerous links.

Here’s a screenshot of Monitor Backlinks.
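Google's disavow tool expects a plain text file with one entry per line: either a full URL or a whole domain prefixed with domain:, with lines starting with # treated as comments. The sketch below assembles such a file from lists you have already reviewed; the domains and URLs are placeholders.

```python
from datetime import date

def disavow_file(domains, urls, note=""):
    """Build disavow-file text: '#' comments, then 'domain:' entries, then full URLs."""
    lines = [f"# Disavow file generated {date.today().isoformat()}"]
    if note:
        lines.append(f"# {note}")
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains})]
    lines += sorted({u.strip() for u in urls})
    return "\n".join(lines) + "\n"

# Placeholder entries; only list links you have reviewed and could not get removed.
text = disavow_file(
    domains=["spammy-links.example", "Spammy-Links.example"],
    urls=["https://bad-directory.example/listing/123.html"],
    note="Reviewed in backlink audit",
)
print(text)
```

Save the result as a UTF-8 .txt file before uploading it. Disavowing a whole domain is usually safer than listing individual URLs when every link from that site is spam.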

Enable AMP

Accelerated Mobile Pages, or AMP, is a project started by Google to improve the mobile web experience.

AMP pages are designed to be lightning-fast and look great on any device. They are built with a restricted set of HTML tags and rules, and they can be served from Google's AMP Cache at no cost.

This results in a simplified, lightweight version of a web page that loads more quickly on mobile devices. Since most internet usage takes place on mobile devices, web pages need to be as fast and responsive as possible.

AMP achieves speed by restricting certain technologies, such as custom JavaScript and external stylesheets, and by caching static assets like images and videos. It also uses a simplified layout that eliminates extraneous code and bulky images. Faster pages encourage your visitors to come back more often.

For example, The Washington Post increased its returning visitors by 23% using AMP.

An AMP page can load in less than one second, even on a 3G network. This improved performance is particularly beneficial for mobile users, who are often on slow networks or have data caps.

Ensure Mobile-friendliness

Making a website mobile-friendly is important for SEO purposes and the site's overall usability. There are a few technical tips that can help make a website mobile-friendly.

First, be sure to use a responsive design template for your website. This will ensure that the site's layout adjusts automatically to fit any screen size.

Also, you should use CSS media queries to control the layout of specific pages or elements on your site. This will allow you to tailor the look and feel of the site specifically for mobile devices.
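Responsive layouts and media queries depend on the viewport meta tag; without it, mobile browsers render the page at a desktop width and shrink it to fit. The sketch below checks a page's HTML for that tag using Python's built-in HTML parser. The two rules it applies (width=device-width present, zooming not disabled) are common recommendations rather than an exhaustive mobile test.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Records the content of the page's <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""

def viewport_issues(html):
    """Return a list of viewport problems; an empty list means none were found."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return ['missing <meta name="viewport"> tag']
    content = finder.viewport.replace(" ", "").lower()
    issues = []
    if "width=device-width" not in content:
        issues.append("viewport width is not set to device-width")
    if "user-scalable=no" in content:
        issues.append("zooming is disabled (user-scalable=no)")
    return issues

print(viewport_issues('<meta name="viewport" content="width=device-width, initial-scale=1">'))
```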

You should also consider using smaller images and fonts, as these will load faster on mobile devices. And lastly, be sure to test your site on different mobile devices and browsers to ensure compatibility.

Platforms like BrowserStack or Rank Watch come in handy to accomplish the task.

Here’s a screenshot of BrowserStack.

Do Regular Site Audit

Periodic site audits are important for several reasons. First and foremost, they allow you to track your site's progress and ensure that it is continuing to meet your business goals.

They also help you find and fix any errors or problems that have developed since your last audit.

Another benefit of regular site audits is that they help you identify new opportunities for optimization and improvement. Not to mention that they help you stay compliant with web standards and best practices laid down by search engines like Google.

Several different software programs can help you do a technical website audit. One of the most popular is Screaming Frog's SEO Spider, a desktop application that you can download for free (the free version crawls up to 500 URLs)!

Once you have it installed, you can simply enter the URL of the website you want to analyze, and the program will crawl all of the pages on the site. It will then report various technical issues, such as broken links, 404 errors, and duplicate content.

Platforms like SE Ranking also offer a free technical audit service.

Here’s a screenshot of SE Ranking.

Technical SEO Mistakes People Make

Missing Alt Tags

Alt text (often called an alt tag) is the alt attribute of an image, used to describe the image for people who cannot see it. Search engines also use it to help index images.

Many web developers and designers are still not using alt tags on their images, leading to a number of problems. For example, if an image is used for a link and the image can't be displayed, then the user won't know where the link goes.

Also, missing alt tags can be extremely frustrating for users of assistive technologies (people who are visually impaired or who are using a screen-reader).

Tools that you may need for a solution:

- SEOptimer — Image Alt checker

- AdResults — A tool to get an overview of images with Alt Text

- Rushax — A tool to find the images without Alt Tag attributes

Here’s a screenshot of Rushax.
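You can also script a basic check. The sketch below lists images that have no alt attribute at all, using Python's built-in HTML parser. An empty alt="" is deliberately not flagged, because that is the correct way to mark a purely decorative image.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        if "alt" not in attrs:  # alt="" is valid for decorative images
            self.missing.append(attrs.get("src", "(no src)"))

def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing

page = '<img src="rug.jpg" alt="Blue wool rug"><img src="logo.png"><a href="/"><img src="home.png"></a>'
print(images_missing_alt(page))
```

The linked home.png is the more serious case: as noted above, an image used as a link with no alt text leaves screen-reader users with no idea where the link goes.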

Incorrect Robots.txt

Robots.txt is a text file at the root of a website that tells web crawlers which URLs they may or may not crawl. It controls crawling rather than indexing, so a blocked page can still appear in search results if other sites link to it. It can also point crawlers to your XML sitemap, and some crawlers honor a nonstandard Crawl-delay directive, although Google ignores it.

Mistakes in a robots.txt file can decrease website traffic and visibility.

Here’s what a robots.txt file looks like.

There are a few common mistakes people make when creating a robots.txt file:

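- Placing the file in the wrong directory (it must sit at the root of the host, as /robots.txt)

- Still using the noindex directive, which Google stopped supporting in robots.txt in 2019

- Ignoring case sensitivity (paths in rules are case-sensitive)

- Misusing trailing slashes (Disallow: /cart also blocks /cart-items, while Disallow: /cart/ does not block /cart)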
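Python's standard urllib.robotparser module can show how a crawler reads your rules before you publish them. The sketch below uses a sample file (the paths are hypothetical) to illustrate two of the mistakes above: paths are case-sensitive, and a rule without a trailing slash matches every path that starts with it. Note that this parser does simple prefix matching and does not implement Google's * and $ wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for www.example.com/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/admin/settings", "/Admin/settings", "/cart", "/cart-items", "/rugs"]:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path:<18} {'allowed' if allowed else 'blocked'}")
```

Here /Admin/settings stays crawlable because it doesn't match /admin/, and /cart-items is blocked because it starts with /cart.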

Ignoring Broken Links

Broken links are those that lead to web pages that no longer exist. When web crawlers follow them, they reach an error instead of content, so there is nothing at that address to index and the crawl effort is wasted.

This can be problematic because it can lead to dead ends for users and make a website appear to have fewer pages.

Broken links can be detrimental to a website's SEO and user experience. Webmasters should routinely check their websites for broken links and fix them. One way to find broken links is to use a tool like Dr Link Check or Xenu's Link Sleuth.

Here’s a screenshot of Dr Link Check.

Not Optimizing Meta Descriptions

Search engine algorithms are designed to rank web pages based on various factors, including the relevance and quality of the content on the page.

However, they also consider other factors, such as the page's title and the keywords used on the page.

Meta descriptions are not a direct ranking factor for Google, but they can still influence how many people click on a page in search results, which makes them worth optimizing.

There are a few things to keep in mind while optimizing meta descriptions:

- Keep them concise - around 155-160 characters is ideal.

- Include your target keyword, but don't sacrifice readability for keyword placement.

- Make sure they accurately describe the content of the page.

- Include a CTA, if appropriate.

Here’s an example of a Meta Description.
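A short script can apply the first two checks in the list above across many pages. The sketch below flags a missing description, one longer than about 160 characters, and one that doesn't contain the target keyword. The 70-character lower bound is an assumed threshold, and because Google truncates snippets by pixel width, character counts are only an approximation.

```python
def audit_meta_description(description, keyword, min_len=70, max_len=160):
    """Return a list of issues with a page's meta description (empty list means OK)."""
    text = (description or "").strip()
    if not text:
        return ["missing meta description"]
    issues = []
    if len(text) > max_len:
        issues.append(f"too long ({len(text)} chars); likely to be truncated")
    elif len(text) < min_len:
        issues.append(f"very short ({len(text)} chars)")
    if keyword.lower() not in text.lower():
        issues.append(f"target keyword {keyword!r} not found")
    return issues

# Hypothetical description for a rug store's category page.
print(audit_meta_description(
    "Shop handwoven wool rugs in every size, with free shipping and 30-day returns. "
    "Browse the full collection today.",
    "wool rugs",
))
```

Accuracy and readability still need a human eye; a script can only catch the mechanical problems.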

Giving a Skip to HTTPS Security

HTTPS protocol creates an encrypted connection between the server and the browser, making it difficult for anyone to intercept the traffic and steal sensitive information. However, many websites do not use HTTPS because they believe it is unnecessary.

Without HTTPS, a website is vulnerable to man-in-the-middle attacks and eavesdropping.

In a man-in-the-middle attack, the attacker inserts themselves between the victim and the server, allowing them to intercept all of the traffic. This can be used to steal passwords, credit card numbers, and other sensitive information.

Three providers of SSL/TLS certificates for enabling HTTPS:

- Thawte — First authority to issue SSL certificates to public entities outside the US

- GoDaddy — Comes with firewall and malware protection

- GeoTrust — Powered by DigiCert, GeoTrust is known for its industry-leading support

GeoTrust offers the following types of SSL certificates.

Inadequate/No Structured Data

Structured data is important for many reasons. One reason is that it provides a way to semantically link related data items together. This is important because it allows search engines and other applications to understand the relationships between data items.

When data is not structured, it becomes difficult to find and use. This can hurt the usability of the data and the overall user experience.

Also, without structured data, search engines have to infer what each page is about, and your pages miss out on eligibility for rich results.

The more unstructured your data is, the harder it becomes to manage and mobilize, PERIOD.

Three helpful tools:

- Schema.org — To test your structured data

- Google — Structured Data Markup Helper

- Technical SEO Schema Markup Generator
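To see whether a page already carries structured data, you can extract its JSON-LD blocks and list the schema.org types they declare. The sketch below does this with Python's standard library and also reports blocks that aren't valid JSON. It doesn't handle Microdata, RDFa, or @graph containers, and it doesn't replace Google's Rich Results Test for checking eligibility.

```python
import json
from html.parser import HTMLParser

class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._capturing = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._capturing = True
            self._buffer = []

    def handle_data(self, data):
        if self._capturing:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._capturing:
            self._capturing = False
            self.blocks.append("".join(self._buffer))

def structured_data_types(html):
    """Return (schema.org types found, JSON parse errors) for a page's JSON-LD."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    types, errors = [], []
    for raw in extractor.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            errors.append(str(exc))
            continue
        for item in data if isinstance(data, list) else [data]:
            if isinstance(item, dict):
                types.append(item.get("@type", "(no @type)"))
    return types, errors
```

A page that returns an empty list of types is a candidate for adding markup; a non-empty error list means existing markup is broken and search engines will likely ignore it.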

Using Unfriendly URLs

SEO-friendly URLs are an important part of on-page Technical SEO.

Using specific keywords in your URL can help improve your website's ranking on search engines. Also, using clean and concise URLs makes it easier for users to remember the web address of your page and share it with others.

SEO-friendly URLs are typically short, keyword-rich, and contain no special characters or symbols. Rather than a long string of numbers and letters, they use words that accurately describe the page's content.

For instance, the URL “https://www.example.com/products/cbd-gummies” is more SEO-friendly than “https://www.example.com/page?id=1234&type=5&subtype=6”.

The latter is hard to remember and type in, and it doesn't include any keywords that people might use to find the products.

You can use SEOptimer’s SEO Friendly URL Checker to find if your URL is unfriendly.
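Most CMSs generate slugs automatically, but the logic is simple. The sketch below turns a page title into a short, lowercase, hyphen-separated slug like the cbd-gummies example above, folding accented letters to plain ASCII and dropping other symbols. It is one reasonable convention, not the only one.

```python
import re
import unicodedata

def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop anything non-ASCII.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    text = re.sub(r"[^a-zA-Z0-9]+", "-", text).strip("-")
    return text.lower()

print(slugify("CBD Gummies"))                   # cbd-gummies
print(slugify("Café Rugs & Runners (2024)!"))   # cafe-rugs-runners-2024
```

If you change the slug of a page that is already live, redirect the old URL to the new one so existing links keep working.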

Ignoring Meta Descriptions

Meta descriptions are a valuable tool for increasing the visibility of your product. They are the brief snippets of text that appear under the title of a search result, and they provide potential customers with a sneak peek at what they can expect if they click on your listing.

By including keyword-rich descriptions that accurately describe your product, you can entice more people to click through to your page.

Additionally, when your description contains the words a person searched for, search engines can highlight them in the snippet, which makes your listing stand out. Read this Google article to learn how to control your snippets using meta descriptions.

When you ignore meta descriptions, the search engine will automatically create its own, which may not accurately reflect the page's content.

This can lead to people not finding your page through a search engine, or worse, finding it but not being interested in the content because the description didn't accurately reflect what the page was about.

You can use Yoast to fill in Meta Descriptions.

Underestimating CDN

There are many benefits to using a CDN, so it's important not to ignore its potential. CDN services are often used to speed up the delivery of static files to customers.

Example:

Say that your website uses images or videos hosted on a separate server in Arizona. A CDN can speed up the delivery of those files to your customers in London by keeping copies of them on servers close to those customers.

Another advantage of using a CDN is that it can help reduce the server's load. The CDN can take some of the load off your server by hosting copies of your files. This can be especially helpful if your website receives a lot of traffic.

Bad Site Architecture

Many companies skimp on important elements of website design, such as site architecture. This can lead to a frustrating user experience, as customers are forced to search through numerous pages to find the information they need.

Good site architecture, on the other hand, makes it easy for users to navigate your website and find what they're looking for. It also helps search engines. A clear, logical structure makes your pages easier to crawl and shows how they relate to one another, which can support your rankings.

Tips:

- Include clear and concise menus

- Organize your product pages logically

- Ensure a logical checkout flow (if yours is an ecommerce site)
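One way to check whether a structure is easy to navigate is to measure click depth: the number of clicks needed to reach each page from the home page. The sketch below runs a breadth-first search over a hypothetical internal-link map. You would normally build that map by crawling your own site. Pages that never appear in the result cannot be reached through internal links at all. The depth threshold of 3 is an assumption, not a search engine rule.

```python
from collections import deque


def click_depth(links, home="/"):
    """Breadth-first search from the home page.

    links maps each page path to the internal pages it links to.
    Returns {page: minimum number of clicks from home}.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal-link map for a small store.
site = {
    "/": ["/shop/", "/blog/"],
    "/shop/": ["/shop/wallets/"],
    "/shop/wallets/": ["/shop/wallets/bifold/"],
    "/blog/": [],
}
depths = click_depth(site)
deep_pages = [page for page, d in depths.items() if d >= 3]  # assumed threshold
```

Here `deep_pages` is `["/shop/wallets/bifold/"]`. Linking to that page from a category or the main menu would bring it closer to the home page.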


Not Using an XML Sitemap

An XML sitemap is a great way for businesses to help search engines discover, crawl, and index the pages on their website. Despite the benefits, many website owners don't create one or don't use it correctly.

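A well-constructed XML sitemap gives search engines a list of the pages on your website, and it can include details such as when each page was last modified. This helps them find and index your pages more reliably, especially new pages or pages that few internal links point to. A sitemap does not improve rankings by itself, but a page that isn't indexed can't rank at all.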

Tip: When creating an XML sitemap, include all the important pages you want indexed, such as the home page, product pages, and blog posts. Use the correct format and follow the guidelines published by Google and other search engines such as Bing.

You can use this tool to generate a sitemap.

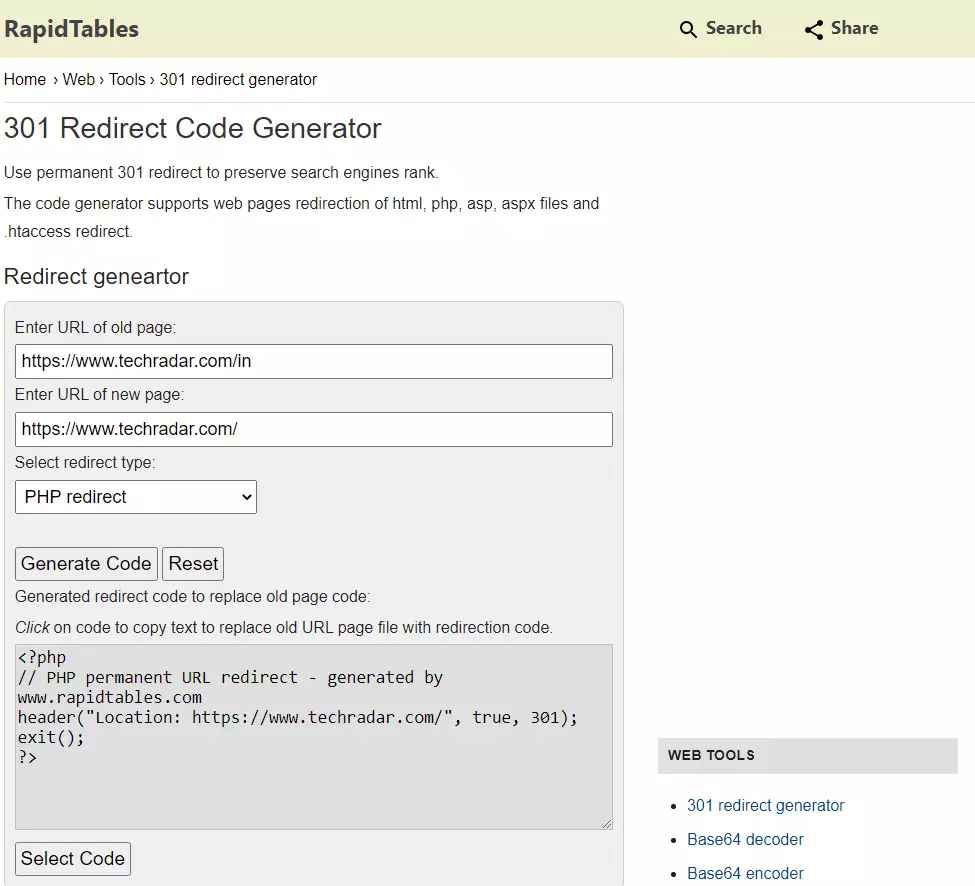
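If you prefer to generate the file yourself, the sketch below writes a minimal sitemap in the sitemaps.org format: a `<urlset>` of `<url>` entries, each with a `<loc>` and an optional `<lastmod>`. The URLs and dates are placeholders. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites need several files plus a sitemap index.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (absolute_url, lastmod) pairs, lastmod as YYYY-MM-DD or None.

    Returns the sitemap as UTF-8 bytes. ElementTree escapes characters such as
    "&" in URLs, which the sitemap protocol requires.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Placeholder pages and dates.
sitemap = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/shop/?sort=price&page=2", "2024-01-10"),
])
```

Write the resulting bytes to `sitemap.xml` at your site root. Then submit it in Google Search Console or Bing Webmaster Tools, or reference it with a `Sitemap:` line in robots.txt.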


Not Setting up 301 Redirects for 4xx Errors

The 4xx errors are a series of HTTP status codes that indicate that the client's request could not be fulfilled. The most common 4xx error is "404 Not Found", which means the requested resource could not be found on the server. Other 4xx errors include "403 Forbidden", "405 Method Not Allowed", and "410 Gone".

A 404 error occurs when a user (or a search engine crawler) requests a page on your website that doesn't exist. The server then returns a 404 error page instead of the content that was requested.

As a result, the user hits a dead end instead of finding what they were looking for, and may leave your site altogether.

Tip: Setting up 301 redirects for pages that return 404 errors sends users to a relevant page on your website, such as the new location of a moved page or its closest replacement.

You can use this tool to create 301 redirects.
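The hard part of redirecting 404s is choosing a relevant target for each one. The sketch below uses a heuristic of our own: it suggests the live URL whose path is most similar to the missing one, using Python's `difflib`. The 0.6 cutoff is `difflib`'s default, not an SEO rule, and the paths are placeholders. Always review the suggestions by hand. When nothing relevant exists, leaving the URL as a 404 (or 410) is better than redirecting everything to the home page.

```python
import difflib


def suggest_redirect(missing_path, live_paths, cutoff=0.6):
    """Return the live path most similar to a 404 path, or None if nothing is close."""
    matches = difflib.get_close_matches(missing_path, live_paths, n=1, cutoff=cutoff)
    return matches[0] if matches else None


def build_redirect_map(not_found_paths, live_paths):
    """Map each 404 path to a suggested 301 target; unmatched paths are left out."""
    redirects = {}
    for path in not_found_paths:
        target = suggest_redirect(path, live_paths)
        if target is not None:
            redirects[path] = target
    return redirects


live = ["/shop/wallets/", "/blog/", "/about/"]
redirects = build_redirect_map(["/shop/wallet/", "/xyz-unrelated-page/"], live)
# {'/shop/wallet/': '/shop/wallets/'}
```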

Important Technical SEO Statistics:

- Google displays meta descriptions in SERPs only 37.22% of the time

- Featured snippets contribute to 40.7% of voice search results

- A whopping 66.31% of online pages do not have any backlinks

- Long-form content earns 77.2% more backlinks than short-form content

- At least 33,549,052 websites use CDN technology in the US

- 25% of pages could save more than 250KB by compressing images

- 46 million websites use SSL certificates

Technical SEO FAQs

Is Technical SEO Overrated?

Technical SEO is often seen as an overrated practice because it can be difficult to measure its impact on site traffic and conversions. However, it is still an important part of any SEO strategy, as it helps to ensure that a website is properly optimized for search engines.

What Are The Components Of Technical SEO?

The components of Technical SEO include, but are not limited to:

- Optimizing website code, structure, and content for crawlers

- Setting up proper sitemaps and robots.txt files

- Ensuring correct usage of hreflang tags

- Troubleshooting indexation issues

- Fixing any crawl errors

Is Website Architecture That Important?

Yes. An SEO-friendly website architecture makes all important pages easily accessible to crawlers and connects relevant pages with internal links.

Where Can I Learn About Technical SEO?

There are a variety of sources that can provide information on Technical SEO. One such source is Google's Webmaster Guidelines (now called Google Search Essentials). They outline how Google views websites and give advice on making sure your site is crawled and indexed correctly.

What Is The Difference Between Technical SEO And Traditional SEO?

Technical SEO includes improving the website's structure and technical factors such as page speed and crawlability. Traditional SEO is the process of optimizing a website for organic search traffic. This includes optimizing the website's content and keywords.

How Does Technical SEO Help Improve My Site Speed?

Technical SEO improves site speed by ensuring that the website's code is clean and efficient. This involves removing unnecessary code, compressing images, and minifying scripts. Another way to improve site speed is to use a CDN, which caches static files on servers worldwide.
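Minification strips characters the browser doesn't need. The sketch below applies the idea to a stylesheet (CSS) rather than a script, because CSS can be minified reasonably safely with a few regular expressions. It is deliberately naive. It assumes the stylesheet has no comment markers, semicolons, commas, or meaningful whitespace inside strings or `url()` values. For production sites, the minifier built into your build tool or CMS is the safer choice.

```python
import re


def minify_css(css):
    """Naive CSS minifier: drops comments and whitespace the browser does not need."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* ... */ comments
    css = re.sub(r"\s+", " ", css)  # collapse runs of whitespace to one space
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)  # trim around braces, semicolons, commas
    # Trim only after colons: a space before one can matter in selectors
    # ("div :first-child" is not the same as "div:first-child").
    css = re.sub(r":\s+", ":", css)
    css = css.replace(";}", "}")  # the last semicolon in a block is optional
    return css.strip()


stylesheet = """
body {
  color: red; /* brand color */
  margin: 0 auto;
}
"""
minified = minify_css(stylesheet)  # 'body{color:red;margin:0 auto}'
```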

How Can I Improve My Website's Security?

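One option is to install a Secure Sockets Layer (SSL) certificate, which lets your site use HTTPS. Strictly speaking, modern certificates use SSL's successor, TLS, but the old name stuck. The certificate encrypts the data sent between the customer's browser and your website, making it much more difficult for someone to intercept and read.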

You can also install firewalls and anti-virus software on your computer systems to help protect them from attack.
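Once the certificate is installed, visitors and crawlers that still request the plain `http://` address should be sent to the HTTPS version with a permanent (301) redirect. Many hosts and web servers offer this as a single setting. As an illustration only, the sketch below shows the same idea as Python WSGI middleware.

- It assumes TLS is handled by the same server, so `wsgi.url_scheme` reflects the scheme the visitor actually used. Behind a load balancer or proxy that terminates TLS, it may always read `http`.
- The `shop` app is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit
from wsgiref.util import request_uri


def force_https(app):
    """Wrap a WSGI app so plain-HTTP requests get a 301 to the same URL over HTTPS."""

    def middleware(environ, start_response):
        if environ.get("wsgi.url_scheme") == "https":
            return app(environ, start_response)
        # Rebuild the requested URL (host, path, query) and swap only the scheme.
        parts = urlsplit(request_uri(environ, include_query=True))
        location = urlunsplit(parts._replace(scheme="https"))
        start_response("301 Moved Permanently",
                       [("Location", location), ("Content-Length", "0")])
        return [b""]

    return middleware


def shop(environ, start_response):
    # Hypothetical application, used only to demonstrate the wrapper.
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Welcome to the shop"]


application = force_https(shop)
```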

What Are The Best Ways To Optimize My Website's Structure?

One way is to create internal links between pages on the site. This will help Google and other search engines index the pages more easily. It's also important to use a hierarchical structure for the website, with a clear and concise navigation bar.
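Internal links only help if every important page receives at least one. A quick way to find "orphan" pages is to compare the URLs in your XML sitemap with the URLs your pages actually link to. In the sketch below, both inputs are hypothetical. They would normally come from your sitemap and from a crawl of your site.

```python
def find_orphan_pages(sitemap_paths, links, home="/"):
    """Return sitemap pages that no page links to (the home page is exempt).

    links maps each page path to the internal paths it links to. In this simple
    check, a page linked only from another orphan still counts as linked.
    """
    linked = {target for targets in links.values() for target in targets}
    return set(sitemap_paths) - linked - {home}


sitemap_paths = {"/", "/shop/", "/shop/wallets/", "/spring-sale-2023/"}
links = {
    "/": ["/shop/"],
    "/shop/": ["/shop/wallets/"],
}
orphans = find_orphan_pages(sitemap_paths, links)  # {'/spring-sale-2023/'}
```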

What Are 301 Redirects?

301 redirects tell browsers and search engines that a page has been permanently moved to a new location. They're often used when a page has been deleted or when a site is restructured.

How To Optimize Images For Search Engines?

Be sure to use appropriate file formats: JPEG works well for photos, while PNG is better for logos and images that contain text. Other basic methods include using descriptive file names, writing useful alternative (alt) text, and reducing the file size.
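Two of these checks, missing alt text and non-descriptive file names, are easy to automate. The sketch below scans a page's `<img>` tags with Python's `html.parser`. It only flags a missing `alt` attribute, because an empty `alt=""` is the correct choice for purely decorative images. The pattern for camera-style names such as `IMG_4032.jpg` is an assumed heuristic.

```python
import posixpath
import re
from html.parser import HTMLParser
from urllib.parse import urlsplit

# Assumed heuristic for default camera/export names such as IMG_4032.jpg or DSC-0012.png.
GENERIC_NAME = re.compile(r"^(?:img|dsc|image)[_-]?\d+\.\w+$", re.IGNORECASE)


class ImageAuditor(HTMLParser):
    def __init__(self):
        super().__init__()
        self.missing_alt = []  # <img> tags with no alt attribute at all
        self.generic_names = []  # <img> tags whose file name says nothing about the image

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        if "alt" not in attributes:
            self.missing_alt.append(src)
        file_name = posixpath.basename(urlsplit(src).path)
        if GENERIC_NAME.match(file_name):
            self.generic_names.append(src)


auditor = ImageAuditor()
auditor.feed(
    '<img src="/photos/IMG_4032.jpg">'
    '<img src="/photos/brown-leather-wallet.jpg" alt="Brown leather bifold wallet">'
    '<img src="/spacer.gif" alt="">'
)
# auditor.missing_alt   -> ['/photos/IMG_4032.jpg']
# auditor.generic_names -> ['/photos/IMG_4032.jpg']
```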

How To Set Up 301 Redirects Properly?

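If your website runs on an Apache web server, you can set up 301 redirects in a file called .htaccess at the root of your website. This file contains instructions that tell the web server how to handle requests for specific pages. Other servers, such as Nginx, use their own configuration files instead.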
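Each redirect is a single line in .htaccess. The sketch below generates `Redirect 301` lines (Apache's mod_alias directive) from a mapping of old paths to new URLs. The paths and domain are placeholders. Note that `Redirect` matches by prefix: `/old-wallets/` also redirects `/old-wallets/anything`, with the extra path appended to the new URL. Use `RedirectMatch` when you need an exact match.

```python
def htaccess_redirects(redirects):
    """Turn {old_path: new_url} into Apache mod_alias 'Redirect 301' lines."""
    lines = ["# Permanent (301) redirects"]
    for old_path, new_url in redirects.items():
        # This sketch does not quote arguments, so reject values it cannot emit safely.
        if not old_path.startswith("/") or any(ch.isspace() for ch in old_path + new_url):
            raise ValueError(f"unsupported redirect: {old_path!r} -> {new_url!r}")
        lines.append(f"Redirect 301 {old_path} {new_url}")
    return "\n".join(lines) + "\n"


rules = htaccess_redirects({
    "/old-wallets/": "https://www.example.com/shop/wallets/",
    "/blog/2019-gift-guide/": "https://www.example.com/blog/gift-guide/",
})
```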

How To Fix Crawl Errors?

Many crawl errors come from a misconfigured robots.txt file. To fix them, correct the file and then ask Google to re-crawl the affected pages, for example with the URL Inspection tool in Google Search Console. An error in robots.txt can stop Google from crawling some or all of your website's pages, which usually keeps their content out of search results.
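Before asking Google to re-crawl, it helps to confirm which URLs your robots.txt actually blocks. Python's standard `urllib.robotparser` module can check this offline, as in the sketch below; the rules and URLs are placeholders. One caveat: the parser applies rules in file order and uses the first match, whereas Google uses the most specific matching rule. Results can therefore differ when Allow and Disallow lines overlap.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


robots_txt = """\
User-agent: *
Disallow: /checkout/
Disallow: /search
"""
urls = [
    "https://www.example.com/shop/wallets/",
    "https://www.example.com/checkout/step-1",
    "https://www.example.com/search/wallets",
]
blocked = blocked_urls(robots_txt, urls)
```

Here only the product page stays crawlable. A stray `Disallow: /` under `User-agent: *` would block every URL on the site.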

How To Improve Indexing?

Make sure all web pages are properly formatted for search engine crawling and indexing. This means using clear, concise titles and meta tags and ensuring that all content is crawlable and indexable.
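A common reason a page isn't indexed is a stray `noindex` directive. It can appear in a robots meta tag or in an `X-Robots-Tag` HTTP header. The sketch below checks both; the HTML and header values are placeholders. For brevity, it ignores crawler-specific header forms such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def is_noindexed(html, x_robots_tag=""):
    """True if the page's meta tags or X-Robots-Tag header value block indexing."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    header = {d.strip().lower() for d in x_robots_tag.split(",")}
    blocking = {"noindex", "none"}  # "none" is shorthand for noindex, nofollow
    return bool((finder.directives | header) & blocking)
```

For example, `is_noindexed('<meta name="robots" content="noindex, follow">')` returns `True`.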

What Are Some Best Technical SEO Practices?

Best technical SEO practices include:

- Using a sitemap

- Ensuring that your website is crawlable

- Optimizing your images

- Avoiding complex JavaScript and Flash

How To Fix Indexing Issues?

It is important to troubleshoot the problem and identify the root cause to fix website indexing issues. Common causes of website indexing issues include problems with the robots.txt file, duplicate content, and 4xx errors.

How do CDNs Improve My Site Speed?

CDNs improve speed by hosting copies of website content on servers located worldwide. When a user requests a page, the CDN server closest to them will deliver the content, resulting in a faster load time.

What Are Some Common Errors In Technical SEO?

Some common errors in Technical SEO are not properly configuring the website's server, not using the correct hreflang tags, and not using structured data.

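Not configuring your website's server properly can lead to crawlability issues. Not using the correct hreflang tags can result in incorrect international targeting. And without structured data, your pages may miss out on rich results, such as review stars, in search.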
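hreflang annotations are usually `<link rel="alternate">` tags in each page's `<head>`; they can also go in HTTP headers or the XML sitemap. Every language version should list all versions, including itself, and an `x-default` entry can name the fallback page. Codes combine a language with an optional region, so British English is `en-gb`, not `en-uk`. The sketch below generates that set of tags; the codes and URLs are placeholders.

```python
from html import escape


def hreflang_tags(alternates, x_default):
    """Build hreflang <link> tags.

    alternates maps a language or language-region code (e.g. "en-gb") to the
    absolute URL of that version. Put the same full set on every version.
    """
    tags = [
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)


head_tags = hreflang_tags(
    {"en-us": "https://www.example.com/us/", "en-gb": "https://www.example.com/uk/"},
    x_default="https://www.example.com/",
)
```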

Is Disavowing Unwanted Backlinks Critical?

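Disavowing is only worth doing in specific cases. Suppose a considerable number of spammy, artificial, or low-quality links point to your site, and they have caused, or are likely to cause, a manual action. Then disavowing them helps clean up your link profile. For most sites, Google already ignores such links on its own, so disavowing is rarely critical.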
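If you do decide to disavow links, Google's disavow tool expects a plain-text file with one entry per line:

- a full URL to disavow a single page
- `domain:` followed by a domain name to disavow a whole site
- `#` at the start of a line for comments

The sketch below builds such a file from placeholder lists. Deciding which links belong in it is the hard part, and the code doesn't help with that.

```python
def build_disavow_file(domains, urls, comment=""):
    """Return disavow-file text: optional comment, then domain: lines, then single URLs."""
    lines = [f"# {comment}"] if comment else []
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains})]
    lines += sorted({u.strip() for u in urls})
    return "\n".join(lines) + "\n"


disavow_txt = build_disavow_file(
    domains=["spam-links.example", "Spam-Links.example"],
    urls=["http://link-farm.example/page1.html"],
    comment="Paid links we could not get removed",
)
```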

How To Fix Duplicate Content Issues?

There are a few ways to fix duplicate content issues on a website. One way is to use a canonical tag. This tag tells search engines which page version they should show in their search results. Another way is to use a 301 redirect. This redirects users from one page to another and tells search engines that the original page has been moved.
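Duplicate URLs often differ only by tracking parameters, letter case in the hostname, or a fragment. The sketch below normalizes such variants to one URL and emits the matching canonical tag. Which query parameters count as tracking noise is an assumption; the `TRACKING_PARAMS` set is illustrative. Parameters that change the page content, such as a color filter, are kept.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters treated as tracking noise in this sketch (an assumption; adjust for your site).
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                   "utm_content", "gclid", "fbclid"}


def canonical_url(url):
    """Lowercase scheme and host, drop tracking parameters and the #fragment.

    parse_qsl/urlencode may re-encode remaining values (e.g. spaces become "+").
    """
    parts = urlsplit(url)
    query = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
             if key.lower() not in TRACKING_PARAMS]
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(),
                       parts.path or "/", urlencode(query), ""))


def canonical_tag(url):
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'
```

For example, `canonical_url("https://WWW.Example.com/shop/wallets/?utm_source=newsletter&color=brown#reviews")` returns `"https://www.example.com/shop/wallets/?color=brown"`.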

How To Find Broken Links?

One method of detecting broken links on a website is to use a tool such as Xenu's Link Sleuth. This tool allows you to scan your website for broken links and provides you with a results report.
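You can also script this check. The sketch below collects the links on one page with `html.parser` and reports those whose HTTP status is 400 or higher.

- The status check is passed in as a function, so it can be swapped out or tested offline.
- The included `http_status` helper sends a HEAD request with `urllib`. Some servers reject HEAD requests (405), and network failures such as timeouts are raised rather than reported.
- The helper's User-Agent string is a placeholder.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen

SKIPPED_PREFIXES = ("#", "mailto:", "tel:", "javascript:")


class LinkCollector(HTMLParser):
    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href and not href.startswith(SKIPPED_PREFIXES):
            self.links.append(urljoin(self.base_url, href))  # resolve relative links


def http_status(url, timeout=10):
    """Return the HTTP status code for url using a HEAD request."""
    request = Request(url, method="HEAD", headers={"User-Agent": "broken-link-sketch"})
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as error:  # urlopen raises HTTPError for 4xx/5xx responses
        return error.code


def find_broken_links(html, base_url, get_status=http_status):
    """Return (url, status) pairs for links on the page that answer with 4xx/5xx."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    unique_links = dict.fromkeys(collector.links)  # de-duplicate, keep page order
    results = [(url, get_status(url)) for url in unique_links]
    return [(url, status) for url, status in results if status >= 400]
```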

Do 404 Errors Affect My Site Ranking?

Google uses various signals to determine how well a page ranks in its search results, and 404 errors are not typically one of those signals.

Does Technical SEO Cost More?

The cost of Technical SEO will vary depending on the size and complexity of a website and the specific services required. However, technical SEO is not a cheap investment and can require time and effort to implement correctly.

Final Thoughts:

Alright, it's time to wrap up this marathon guide.

Remember to always test any changes before you implement them on your live site, and use the tools and techniques mentioned above to get the most accurate results.


To Make Your Site Easy to Navigate
